Psychoacoustics: how perception influences music production

With a basic understanding of how humans interpret and react to sound, you can create more satisfying mixes that play on the experience of listening—known as psychoacoustics. We look at several examples for music production.

Hang around with audio engineers long enough and someone will bring up the concept of psychoacoustics. If you’re a beginner, you might not know what the term “psychoacoustics” means, and you might be too scared to raise your hand and say, “What is psychoacoustics?”

In this article, we'll explain psychoacoustics in music, and give you examples of how it can be used effectively in your tracks.

What is psychoacoustics?

Though we can measure sound with meters and spectrum analyzers, how we experience it is a matter of human perception. The study of that perception is the field of psychoacoustics.

Even if you aren’t aware of the term, you likely engage with psychoacoustic principles in music production on a regular basis—for example, manipulating an unusual sound source to be heard as a conventional instrument. With a basic understanding of how humans interpret and react to sound, you can create more satisfying mixes that play on the experience of listening, independent of software and equipment. Let’s take a look at some examples.

Take a mono saxophone recording. If I pan it over to the left, you will hear it in your left ear.

Sax panned left

However, if I take this mono signal, make it stereo with iZotope’s Relay plugin, and delay the right side by 7 milliseconds, the sound will also lean leftwards:

Sax delayed for leftward appearance

Yes, it sounds different from strict panning, but the sound arrives in your left ear first and in your right ear 7 milliseconds later. As long as you have two working ears and a properly functioning playback system, the sound will lean left, regardless of whether you’re listening on headphones or speakers.

This is a psychoacoustic principle in action: if sound arrives in the left ear first, you will position it leftwards in space.
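To make the difference between the two approaches concrete, here is a minimal sketch in plain Python. It is not how Relay or any particular plug-in works internally: the function names, the constant-power pan law, and the 440 Hz test tone standing in for the sax are all assumptions made for illustration.

```python
import math

def pan_constant_power(mono, pan):
    """Pan a mono signal; pan runs from -1.0 (hard left) to 1.0 (hard right)."""
    angle = (pan + 1.0) * math.pi / 4.0  # 0 = hard left, pi/2 = hard right
    left_gain, right_gain = math.cos(angle), math.sin(angle)
    left = [s * left_gain for s in mono]
    right = [s * right_gain for s in mono]
    return left, right

def delay_right_channel(mono, sample_rate, delay_ms):
    """Return (left, right) at equal level, with the right channel arriving late."""
    delay_samples = round(sample_rate * delay_ms / 1000.0)
    left = list(mono) + [0.0] * delay_samples   # pad so both channels match in length
    right = [0.0] * delay_samples + list(mono)  # same audio, shifted later in time
    return left, right

# A short 440 Hz test tone standing in for the sax (an assumption, not the article's audio).
SAMPLE_RATE = 48000
tone = [math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(4800)]

# Approach 1: level difference only. The right channel is silent.
panned_left, panned_right = pan_constant_power(tone, -1.0)

# Approach 2: time difference only. Both channels are equally loud,
# but the right one starts 7 ms (336 samples at 48 kHz) later.
early_left, late_right = delay_right_channel(tone, SAMPLE_RATE, 7.0)
```

In the first case the leftward image comes purely from a level difference; in the second, both channels carry the same level and only the arrival time differs.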

Limits of hearing

The best place to start with psychoacoustics is to get familiar with the limits of human hearing. You probably already know that we can hear sounds within a range of 20 Hz to 20 kHz (20,000 Hz), with the upper limit decreasing to around 16 kHz with age. Noise-induced hearing loss and tinnitus will impact the perception of sound too, and for producers with these conditions, workarounds need to be developed to achieve balanced mixes.

Due to our hearing limits, you may find that high-passing at around 30 Hz brightens a mix by removing unimportant low-end information that is hard to perceive, although this is not always the case. Raising that filter to 50–60 Hz, while rolling off the high end above 10–12 kHz, will make mixes and instruments sound “lo-fi,” replicating the limited frequency response of old recording technology.
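As a rough illustration of that band-limiting, here is a sketch using the simplest possible filters: a one-pole low-pass, and a high-pass built by subtracting the low-passed signal from the input. These are gentle 6 dB-per-octave slopes, far less steep than a typical DAW EQ, and the function names and default cutoffs are assumptions chosen to match the ranges mentioned above.

```python
import math

def one_pole_lowpass(samples, sample_rate, cutoff_hz):
    """Gentle low-pass: each output moves a fraction of the way toward the input."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, state = [], 0.0
    for x in samples:
        state += alpha * (x - state)
        out.append(state)
    return out

def one_pole_highpass(samples, sample_rate, cutoff_hz):
    """Gentle high-pass: the input minus its low-passed version."""
    low = one_pole_lowpass(samples, sample_rate, cutoff_hz)
    return [x - l for x, l in zip(samples, low)]

def lofi_band_limit(samples, sample_rate, low_cut_hz=60.0, high_cut_hz=10000.0):
    """Trim both ends of the spectrum (cutoff defaults are illustrative)."""
    trimmed_lows = one_pole_highpass(samples, sample_rate, low_cut_hz)
    return one_pole_lowpass(trimmed_lows, sample_rate, high_cut_hz)

def sine(freq_hz, sample_rate, seconds=1.0):
    count = int(sample_rate * seconds)
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate) for n in range(count)]

def rms(samples):
    return math.sqrt(sum(s * s for s in samples) / len(samples))

SAMPLE_RATE = 48000
for freq in (20, 1000, 16000):
    tone = sine(freq, SAMPLE_RATE)
    kept = rms(lofi_band_limit(tone, SAMPLE_RATE)) / rms(tone)
    print(f"{freq:>6} Hz: {kept:.2f} of the original level remains")
```

With slopes this shallow the effect is subtle; a real lo-fi treatment would likely use steeper filters, but the principle of trimming both ends of the spectrum is the same.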

Read about the evolution of frequency response in popular records from the 1920s to today here.

Sound sensitivity

In modern music, the key to achieving a pleasing mix is an even balance of frequencies across the spectrum. While simple in theory, this is often a challenge to pull off since our ears do not perceive all frequencies equally: we are most sensitive in the high mid-range (between 2,500 and 5,000 Hz).

This is the main reason behind the commonly used “smiley face” EQ curve, where the mids are scooped out and the lows and highs are boosted. At low levels, a broad bass and treble boost will make a mix sound more balanced and powerful, a classic case of louder sounding better, but it can also reduce dynamic range and even introduce distortion.

As we crank up playback level, our ears’ frequency response across the spectrum begins to even out, and some of the issues introduced by our selective ears are eliminated. But, as you know, producing at high levels for extended periods of time is both damaging to our ears and misleading, since it makes us think every instrument is upfront in the mix. This becomes evident when levels are turned down and what we’re working on seems out of control.
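One way to put rough numbers on the uneven sensitivity described in this section is the standard A-weighting curve used in sound level meters. It is only a proxy: it was designed around hearing at fairly low listening levels and does not change with playback volume the way our ears do. The function name below is my own, but the formula is the standard one.

```python
import math

def a_weighting_db(freq_hz):
    """Standard A-weighting gain in dB at the given frequency (about 0 dB at 1 kHz)."""
    f2 = freq_hz ** 2
    numerator = (12194.0 ** 2) * f2 ** 2
    denominator = (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20.0 * math.log10(numerator / denominator) + 2.0

# Compare how heavily the curve discounts lows versus the upper mids.
for freq in (50, 100, 1000, 3000, 10000):
    print(f"{freq:>6} Hz: {a_weighting_db(freq):+.1f} dB")
```

The curve sits about 19 dB down at 100 Hz relative to 1 kHz and rises slightly above 0 dB in the upper mids, which lines up with the idea that quiet playback under-represents the low end.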

Psychoacoustics and immersive audio

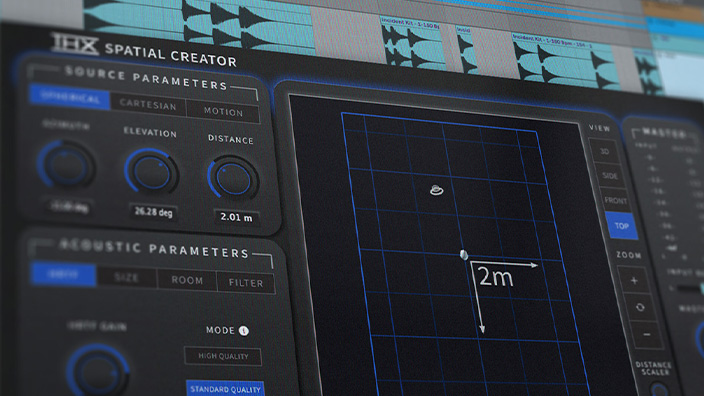

One could argue that the modern craze around spatial audio is made possible entirely by psychoacoustics: researchers have discovered how to make you feel audio in three dimensions (up/down, left/right, front/back) when you wear headphones. This research has powered the binaural and spatial-audio engines of Atmos rendering, making it possible for listeners to enjoy a more immersive mix in headphones.

But even in simple stereo formats, we can create all sorts of psychoacoustic effects with four simple tools: a fader, a panner, an EQ, and a time-based effect (delay or reverb). I will now provide examples of how to manipulate these tools to achieve psychoacoustic effects in simple stereo mixes.

2 ways to use psychoacoustics in your productions

1. Make a sound fade into the background with automated EQ and verb

Have you ever wanted a track to lurk deep in the background of the sonic space you’re creating?

Think of a movie where a character walks into the foreground from the background. Is that an effect you’ve tried to achieve?

If you have tried to make that happen, you may have noticed something: turning the sound down in volume isn’t enough to achieve this effect. In terms of psychoacoustics, merely adjusting a volume control doesn’t really sell the brain on background/foreground imagery.

To get there, you have to rely on EQ and time-based effects. I’ll explain now:

As a sound gets further away from you, yes, it gets quieter—but in the real world, as it gets quieter, it also takes on a different tonal profile, a different EQ signature. Observe this graph:

Equal loudness curve

This graph depicts equal loudness curves at increasing listening levels. The vertical axis is dB SPL, and the horizontal axis is frequency. As the signal gets louder, we perceive more of its high and low frequencies. As it gets farther away, and therefore quieter, we tend to hear less of the lows and highs.

Remember that as a sound moves farther away, it also takes on more aspects of its surrounding environment. It’s not a bad idea to introduce a time-based effect into the mix—something like Neoverb.

Neoverb
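If you want to automate this move, it can help to think of "distance" as a single control that drives level, EQ, and reverb together. The sketch below is one hypothetical mapping, not a measured model. The level drop follows the inverse-distance rule for a point source in open space (about 6 dB per doubling of distance), while the filter cutoffs and reverb amounts are invented starting points you would tune by ear.

```python
import math

def distance_settings(distance_m, reference_m=1.0):
    """Suggest fader, EQ, and reverb values for a source at distance_m.

    Only gain_db follows a physical rule; the other values are
    illustrative assumptions meant to be adjusted by ear.
    """
    ratio = max(distance_m / reference_m, 1.0)
    doublings = math.log2(ratio)
    return {
        # Inverse-distance law: roughly -6 dB for each doubling of distance.
        "gain_db": -20.0 * math.log10(ratio),
        # Assumed: darken the top end a little with each doubling.
        "low_pass_hz": max(2000.0, 18000.0 * 0.75 ** doublings),
        # Assumed: thin out the low end a little with each doubling.
        "high_pass_hz": min(250.0, 40.0 * 1.3 ** doublings),
        # Assumed: more of the surrounding space as the source recedes.
        "reverb_wet": min(0.6, 0.1 * doublings),
    }

for meters in (1, 2, 4, 8, 16):
    print(meters, "m:", distance_settings(meters))
```

Drawing automation lanes from values like these keeps the fader, EQ, and reverb moving together, which is what sells the move from foreground to background.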

2. Make a sound feel as though it’s coming from outdoors with delay

Let’s say we have a vocal and guitar playing at the same time:

Now, for the sake of argument, let’s say we want to give the listener the feeling that this song is being played in a forest. In fact, let’s put some forest sound design into the arrangement, just for the fun of it.

It sounds like we’ve pasted Pete Mancini and his acoustic guitar on top of a forest bed. Pete doesn’t sound like he’s in the environment.

You might think a reverb would help us really sell the effect on a psychoacoustic level, but that isn’t the case for outdoor locations. Yes, fantastic verbs like Exponential Audio’s Stratus give us exterior presets, such as this one:

Stratus

However, relying on this verb by itself won’t sound particularly real:

Strange as it is to say, you won’t find reverbs of much help when recreating the great outdoors. Reverb is designed to give us the feeling of reflections bouncing off of room walls. There are no walls in the forest.

What you do notice, if you raise your voice in a forest, is that the louder you sing, the more of an echo—or slapback delay—you’ll hear on your voice. This effect can be recreated psychoacoustically in a DAW to great results.

Here’s how I’ll go about doing it. First, I’ll send the guitar and the vocals to an aux track with a downward expander on it. In this case I’ll be using the expander in the bx_console SSL 9000J plug-in:

Expander

Hear how the signal crescendos into loudness, depending on how hard Pete hits his guitar, or how loud he sings? Notice how it’s all relatively smooth? That’s what the bx_console 9000J can get you.

Next, we’ll feed this into a delay, one with independent times and filters for the left and right channels:

bx_delay 2500
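For readers who like to see signal flow spelled out, the expander-into-delay chain can be sketched roughly as follows. This is a simplified stand-in, not a model of the bx_console or bx_delay plug-ins: the threshold, ratio, release, and delay times are all assumed values, and the per-channel filters are left out for brevity.

```python
def downward_expand(samples, threshold=0.2, ratio=2.0, release=0.999):
    """Turn down material below the threshold; louder playing passes untouched."""
    out, envelope = [], 0.0
    for x in samples:
        # Peak envelope follower: rises instantly, falls slowly.
        envelope = max(abs(x), envelope * release)
        if 0.0 < envelope < threshold:
            # Each dB below the threshold becomes `ratio` dB below it.
            gain = (envelope / threshold) ** (ratio - 1.0)
        else:
            gain = 1.0
        out.append(x * gain)
    return out

def stereo_slapback(samples, sample_rate, left_ms=90.0, right_ms=120.0, level=0.5):
    """Return (left, right) echoes only, each channel with its own delay time."""
    def delayed(ms):
        offset = round(sample_rate * ms / 1000.0)
        return [0.0] * offset + [s * level for s in samples]

    left, right = delayed(left_ms), delayed(right_ms)
    length = max(len(left), len(right))
    left += [0.0] * (length - len(left))    # pad to a common length
    right += [0.0] * (length - len(right))
    return left, right

def outdoor_echo_send(samples, sample_rate):
    """Aux chain: the expander feeds the delay, so loud phrases echo the most."""
    return stereo_slapback(downward_expand(samples), sample_rate)
```

Because the expander sits before the delay, quiet passages barely reach the echo while loud ones do, which mirrors the way a raised voice produces more audible slapback outdoors.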

Now that we’ve got our delay figured out, we can edge this track into the mix, alongside a smidge of that Stratus setting I showed you earlier. The results are much more convincing:

Unmasking instruments

The more sounds we add to a mix, the harder it is to separate them, and the more frequency masking occurs. This is particularly noticeable between instruments that share similar frequencies—if a kick and bass note occur at the same time, one will mask parts of the other, sometimes to the point of being inaudible.

Masking is one of the most common psychoacoustic phenomena, and is present in all mixes, demos, and polished masters. But too much of it may be undesirable. To reduce masking in a production or engineering context, we use EQ to carve out a unique space in the spectrum for each element in a mix. Some of this work can also be taken care of at the writing and arrangement stage by choosing instruments and notes that don’t reach over one another.

Even if we take these precautions, we often add and remove song parts throughout the course of a mix, shifting harmonic structure and causing masking issues to surface. For this reason, a quick way to resolve masking is essential for an efficient workflow. That’s why we came up with the Unmask feature in Neutron, which reveals competing frequencies between two tracks in a single window, and allows you to EQ them independently or with an inverse curve, meaning a cut in one EQ will have a complementary boost in the other.

The Unmask feature in Neutron gives you a big picture view of your mix with an unbiased perspective, along with control over the smallest details, so you can more easily resolve common audio perception problems and stay focused on the music.
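Independent of any particular plug-in, the basic idea of spotting where two tracks compete can be sketched in a few lines. The example below is a toy and makes no claim about how Neutron's Unmask works: it uses the Goertzel algorithm to measure energy at a handful of probe frequencies and flags those where both tracks are strong. Real masking also depends on level, bandwidth, and timing. The function names and the 20 dB window are assumptions.

```python
import math

def goertzel_power(samples, sample_rate, freq_hz):
    """Energy of `samples` at a single frequency (Goertzel algorithm)."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq_hz / sample_rate)
    s1 = s2 = 0.0
    for x in samples:
        s0 = x + coeff * s1 - s2
        s2, s1 = s1, s0
    return s1 * s1 + s2 * s2 - coeff * s1 * s2

def shared_frequencies(track_a, track_b, sample_rate, probe_freqs, window_db=20.0):
    """Probe frequencies where both tracks sit within window_db of their own peak."""
    def relative_levels(track):
        powers = [max(goertzel_power(track, sample_rate, f), 1e-12) for f in probe_freqs]
        peak = max(powers)
        return [10.0 * math.log10(p / peak) for p in powers]

    levels_a = relative_levels(track_a)
    levels_b = relative_levels(track_b)
    return [
        f for f, a, b in zip(probe_freqs, levels_a, levels_b)
        if a > -window_db and b > -window_db
    ]

# Two made-up "tracks" that overlap only at 100 Hz.
SAMPLE_RATE, COUNT = 8000, 800

def tone_mix(freqs):
    return [
        sum(math.sin(2 * math.pi * f * n / SAMPLE_RATE) for f in freqs)
        for n in range(COUNT)
    ]

kick_like = tone_mix([100, 1000])
bass_like = tone_mix([100, 3000])
print(shared_frequencies(kick_like, bass_like, SAMPLE_RATE, [100, 1000, 3000]))
```

The flagged frequencies are the places to consider a cut on one track, optionally with a complementary boost on the other.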

Spatial location

Having two ears instead of one allows us to more accurately determine the location of sound. At a crowded party, localization tells us which direction people are speaking to us from. It also provides cues as to whether traffic is moving toward us or away from us, and where our keys are hiding in our jacket. In a music production context, we place sounds at various spatial locations to achieve a sense of mix width and depth.

Width is the stereo field from left to right. A key psychoacoustic principle used to achieve the illusion of width is the Haas effect, which explains that when two identical sounds occur within 30 milliseconds of one another, we perceive them as a single event. Depending on the source material, the delay time can reach 40 ms.

I wager the most common application for this effect is on vocals. To create a stereo vocal in a pop chorus, we duplicate the mono lead, add a slight delay to the copy, then pan each part in opposite directions. In addition to opening up the center of a mix for other sounds, this move allows the listener (and producer) to perceive anthemic vocal width from a single mono source. Our free Vocal Doubler plugin is based on this exact concept.

With short delay times of 5–15 ms between two identical sounds, you will notice some funny metallic sounds that occur as the signals go in and out of phase with each other. This comb filtering effect is the underlying concept for audio processors like chorus, flangers, and phasers.
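The metallic quality has a simple arithmetic explanation. When a signal is summed with an equal-level copy of itself delayed by a fixed time, the two cancel at every frequency where the delay equals an odd number of half cycles, which puts the notches at odd multiples of 1 / (2 × delay). The short sketch below lists those frequencies; it assumes the two copies are actually summed (for example, on mono playback), and the function name is my own.

```python
def comb_notch_frequencies(delay_ms, count=5):
    """First `count` notch frequencies in Hz for a signal summed with a
    same-level copy of itself delayed by delay_ms."""
    return [(2 * k + 1) * 1000.0 / (2.0 * delay_ms) for k in range(count)]

for delay_ms in (5.0, 10.0, 15.0):
    notches = [round(f, 1) for f in comb_notch_frequencies(delay_ms)]
    print(f"{delay_ms} ms delay -> notches at {notches} Hz")
```

A 5 ms delay, for instance, places its first notches at 100, 300, and 500 Hz. Longer delays pack the notches closer together, which is part of why the coloration changes character as you sweep the delay time.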

Long delays in the range of 50–80 ms break the illusion, and the second sound will be perceived as an echo. This is rarely a desired effect for pop, but can produce some psychedelic and disorienting moments in more experimental music.

Depth, the front-back space in a mix, is a trickier concept to navigate, but once again, we have psychoacoustic principles as a guide. If loud and bright sounds appear closer, we can push sounds further away by rolling off low and high frequencies—essentially flipping the “smiley face” curve upside down.

This trick works because it mimics how a sound wave travels in the natural world: the further it goes, the more its high frequencies are absorbed by the air, until it disappears completely. Removing some low-end enhances this illusion in a DAW. Think of this next time you shout into a canyon or large open space.

Experiment!

I’ve just given you ways to harness the power of psychoacoustics in your work. But there are thousands of ways to utilize psychoacoustic techniques. I would advise you, at this point, to go outside and listen to the world around you. Focus your ear on any specific sound that you hear—it could be a car passing by, or the wind rustling the trees. Literally any sound will do.

Focusing on the sound, ask yourself how you know it’s moving. Is the movement a function of panning, delay, volume, tonal differences, or all of the above? Remember that all the tools we have boil down to manipulations in level, frequency, direction, and time: try to break the sounds you hear down into these categories.

Then, go home and try to recreate the movement of the sound you heard in your DAW. Use sound effects from freesounds.org if you like, or build them from scratch. You will learn a lot about psychoacoustic phenomena this way.