iZotope

Artists

At iZotope, our artist community isn’t just limited to performers; it also includes engineers, sound designers, producers, and more—anyone consistently using our products in an extraordinary or notable way.

MASTERING ENGINEER

Emily Lazar

GRAMMY Award winner and eight-time nominee, Emily Lazar is one of the most respected mastering and mixing engineers in the world...

RECORD PRODUCER

Greg Kurstin

Greg Kurstin is a songwriter, producer and multi-instrumentalist who has had his hand in many hits over the last decade...

SONGWRITER/MUSICIAN

Kimbra

New Zealand-born, GRAMMY-winning pop star Kimbra is a musical force, innovating the shape of pop today as a songwriter, musician...

RECORD PRODUCER

Larry Klein

Multiple GRAMMY-winning producer, songwriter, bassist, and musician Larry Klein has been nominated for Producer of the Year...

POST-PRODUCTION ENGINEER

Lora Hirschberg

Lora is a re-recording mixer at Skywalker Sound, with over 150 film credits, including a variety of independent and documentary films...

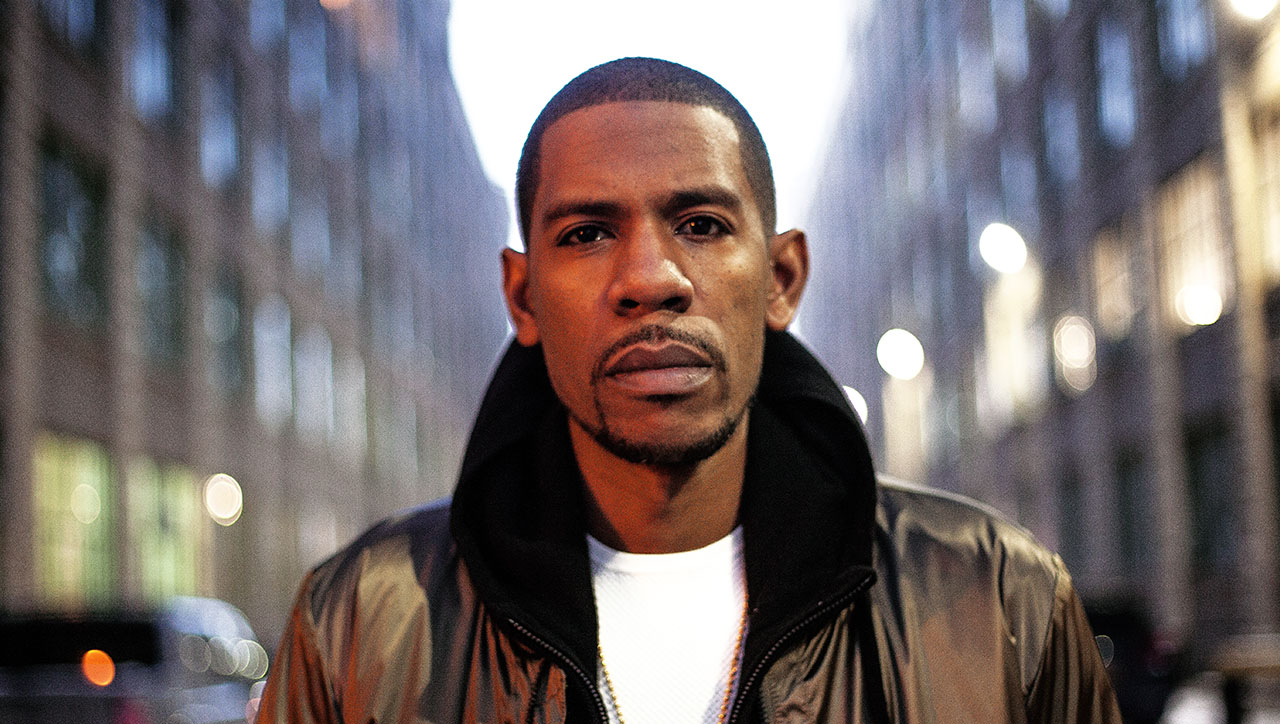

ENGINEER/PRODUCER

Young Guru

Called "the most famous and successful engineer in the history of hip-hop" by the Wall Street Journal, Young Guru is a GRAMMY-winning engineer...