Redefining the rules of mastering: an inside look at Ozone 12’s groundbreaking features

Go behind the scenes with the iZotope Research Team to discover the innovative technology behind Ozone 12’s new IRC 5 Maximizer, Unlimiter, and Stem EQ.

For over two decades, iZotope Ozone has been a leader in mastering technology, trusted by engineers and producers to bring their tracks to life. With the launch of Ozone 12 Advanced, we dive into the groundbreaking technology behind some of the new modules – the IRC 5 Maximizer mode, the industry-first Unlimiter, and Stem EQ – to get a glimpse into how Ozone is redefining what's possible in music production.

Principal Research Engineer Alexey Lukin and Senior Research Engineer Johannes Imort

The evolution of loudness: a deeper dive with Alexey Lukin

Alexey Lukin, Principal Research Engineer at iZotope, discusses the development of the new IRC 5 Maximizer mode, an advanced algorithm designed to help users achieve a louder, clearer master without the usual trade-offs.

Ozone 12 Maximizer IRC 5 mode

"We wanted to use our most advanced DSP that runs a psychoacoustic model to predict the audibility of Maximizer distortion – all within a multiband design. The R&D team had to deal with serious CPU and latency demands of IRC 5 and optimize the design for real-time performance." - Alexey Lukin

You worked on the new IRC 5 limiting mode for the Maximizer. Can you tell us about the specific improvements and how the new algorithm helps users achieve a louder, clearer master without the usual trade-offs of an overworked limiter?

Alexey: IRC 5 is the first multiband limiter algorithm in Ozone. For over two decades our research team has perfected the sound of Ozone Maximizers. Yet, all of them were a single-band design. With this design, a Maximizer is a peak limiter that applies a variable gain envelope to the signal.

There is a lot of fine craft in computing this gain envelope. A limiter has to be fast enough to react to signal transients and true peaks without causing pumping on the sustained tones. At the same time, the limiter has to be slow and gentle on the sustained tones to prevent audible distortion and modulation. This kind of balancing is achieved by Ozone’s Intelligent Release Control technology.
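To make the idea of a gain envelope concrete, here is a minimal single-band sketch. It is an editorial illustration, not iZotope's algorithm: the instantaneous attack, the one-pole release, and the names `limiter_gain`, `ceiling`, and `release_ms` are all assumptions chosen for brevity.

```python
import math

def limiter_gain(samples, ceiling=1.0, release_ms=50.0, sample_rate=48000):
    """Return a per-sample gain envelope so that |sample * gain| <= ceiling.

    Assumptions for illustration: attack is instantaneous (no look-ahead)
    and release is a one-pole recovery toward unity gain.
    """
    release_coeff = math.exp(-1.0 / (release_ms * 0.001 * sample_rate))
    gain = 1.0
    envelope = []
    for x in samples:
        peak = abs(x)
        # Gain needed right now to keep this sample under the ceiling.
        target = ceiling / peak if peak > ceiling else 1.0
        if target < gain:
            gain = target  # fast attack: clamp down immediately
        else:
            # slow release: drift back toward the target gain
            gain = release_coeff * gain + (1.0 - release_coeff) * target
        envelope.append(gain)
    return envelope

signal = [0.5, 2.0, 0.5, 0.5]
envelope = limiter_gain(signal)
limited = [x * g for x, g in zip(signal, envelope)]
```

In this toy version a shorter `release_ms` makes the gain move faster, which tends toward distortion on sustained tones, while a longer one tends toward audible pumping. That is the balance the answer above describes.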

Between IRC 1, 2, 3, and 4 modes, the Maximizer was tuned to react to signal features like crest factor, the ratio of tonal and noisy components, and perceived distortion. However, there was always a limit to this approach: heavy bass notes inevitably affected higher-frequency sounds, because they shared the same gain envelope. This caused either pumping or distortion that we needed to carefully balance, depending on the user’s setting of Character, which affected the overall ballistics.
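Crest factor, one of the signal features mentioned above, is the ratio of peak level to RMS level. A minimal sketch of the standard definition follows; the function name `crest_factor_db` is just an illustrative choice, and nothing here reflects how Ozone measures it internally.

```python
import math

def crest_factor_db(samples):
    """Peak-to-RMS ratio in decibels. Expects a non-silent block of samples."""
    peak = max(abs(x) for x in samples)
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return 20.0 * math.log10(peak / rms)

# Four full cycles of a sine wave: crest factor is close to 3.01 dB.
sine = [math.sin(2.0 * math.pi * n / 64.0) for n in range(256)]
sine_crest = crest_factor_db(sine)

# A square wave has equal peak and RMS levels, so its crest factor is 0 dB.
square = [1.0, -1.0] * 128
square_crest = crest_factor_db(square)
```

Transient-heavy material such as drums has a high crest factor, while heavily limited material approaches the low values of the square wave.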

In IRC 4 mode, we introduced a spectral shaper to tame the most problematic frequency components and reduce their effect on other frequency ranges. However, it was not until IRC 5 that we introduced a truly multiband design. Now the Maximizer can reduce modulation and pumping by applying different gain envelopes in four frequency bands. The bands that contribute the most to overall peak levels are attenuated first, freeing up headroom for the other bands. Can this produce a tonal shift? Yes, and the amount of it has been carefully balanced against the benefits of reduced pumping and intermodulation.

Having the freedom of operating in four frequency bands also means that different attack and release times can be used in different frequency ranges – something that would not be possible with single-band designs. iZotope’s Sound Design team has optimized the response of the limiters across frequencies with the goal of producing a louder, cleaner master while still retaining user control over the sound character.
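The multiband idea can be sketched in a few lines. This is a deliberately crude editorial illustration under stated assumptions, not the IRC 5 design: the one-pole crossovers, the crossover frequencies, the per-band release times, and the fixed quarter-of-the-ceiling headroom per band are all hypothetical. A real design would allocate headroom adaptively rather than splitting it evenly.

```python
import math

def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """Simple one-pole low-pass, used here only as a crossover stand-in."""
    coeff = math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    state, out = 0.0, []
    for x in samples:
        state = coeff * state + (1.0 - coeff) * x
        out.append(state)
    return out

def split_bands(samples, crossovers_hz, sample_rate):
    """Split into len(crossovers_hz) + 1 bands that sum back to the input."""
    bands, rest = [], list(samples)
    for cutoff in crossovers_hz:
        low = one_pole_lowpass(rest, cutoff, sample_rate)
        bands.append(low)
        rest = [r - l for r, l in zip(rest, low)]  # what is left above cutoff
    bands.append(rest)
    return bands

def band_gain(band, ceiling, release_ms, sample_rate):
    """Instant-attack, one-pole-release gain envelope for one band."""
    release = math.exp(-1.0 / (release_ms * 0.001 * sample_rate))
    gain, envelope = 1.0, []
    for x in band:
        target = ceiling / abs(x) if abs(x) > ceiling else 1.0
        if target < gain:
            gain = target
        else:
            gain = release * gain + (1.0 - release) * target
        envelope.append(gain)
    return envelope

def multiband_limit(samples, sample_rate=48000,
                    crossovers_hz=(120.0, 1000.0, 6000.0),
                    release_ms=(200.0, 80.0, 30.0, 10.0),
                    band_ceiling=0.25):
    """Limit each band with its own release time, then sum the bands."""
    out = [0.0] * len(samples)
    bands = split_bands(samples, crossovers_hz, sample_rate)
    for band, rel in zip(bands, release_ms):
        gains = band_gain(band, band_ceiling, rel, sample_rate)
        for i, (x, g) in enumerate(zip(band, gains)):
            out[i] += x * g
    return out
```

Because each band has its own envelope, a loud bass note in this sketch pulls down only the lowest band, and the slower release there does not force the same ballistics onto the high frequencies.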

Ozone 12's new IRC 5 limiting mode is described as the "most advanced algorithm yet" for the Maximizer. What were some of the biggest technical challenges you faced while developing this new algorithm, and how did your team overcome them?

Alexey: We wanted to use our most advanced DSP that runs a psychoacoustic model to predict the audibility of Maximizer distortion – all within a multiband design. The R&D team had to deal with serious CPU and latency demands of IRC 5 and optimize the design for real-time performance.

Making IRC 5 work with all the advanced features of Ozone Maximizer, like True Peak limiting, Transient Emphasis, and independent Transient/Sustain stereo handling, was an engineering challenge on its own. At some point we considered dropping the Transient Emphasis feature for IRC 5 in order to reduce the CPU load and latency, but after receiving reassuring beta team feedback we decided to keep the option.

How do you approach the task of innovating on a feature like the Maximizer, which is a fan-favorite from past versions of Ozone?

Alexey: It’s been a mix of our own ideas and adopting best practices from the industry. When we first came up with Intelligent Release Control technology in 2003 for Ozone 3, our aspiration was to give our users flexibility of control together with top-notch sound quality. We felt it was important to give our users the ability to vary the Maximizer ballistics (which we called Character) over a wider range, allowing engineers to use it as a soft clipper to extend their sonic palette.

Following IRC 1, the designs of IRC 2 and 3 used a more complex spectral analysis to determine the best way to handle different parts of the signal. Our patented method of using a psychoacoustic model has allowed us to jump from a heuristic control of the attack/release time to a more optimal and universal method that drove the success of IRC 3.

When the ITU-R BS.1770 true-peak measurement standard was adopted, our R&D team analyzed it and identified some degree of variability between compliant measurement software. We came up with a way to make Ozone Maximizer more compliant with different meters and published an AES article on that.
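For readers unfamiliar with true peak: the reconstructed analog waveform can exceed the highest digital sample, so true-peak meters oversample the signal before taking the maximum (the BS.1770 annex describes a 4x oversampling approach). The sketch below is an editorial illustration that uses a generic Hann-windowed sinc interpolator; the kernel, `half_width`, and the function name are assumptions, not the filter from the standard. Different interpolation filters give slightly different readings, which is one plausible source of the meter-to-meter variability mentioned above.

```python
import math

def true_peak_estimate(samples, oversample=4, half_width=16):
    """Estimate the inter-sample peak with Hann-windowed sinc interpolation.

    The kernel is a generic illustrative choice, not the BS.1770 filter.
    Positions near the edges, where the kernel would be truncated, are skipped.
    """
    peak = max(abs(x) for x in samples)  # never report less than the sample peak
    for n in range(half_width - 1, len(samples) - half_width):
        for k in range(1, oversample):  # k = 0 is the original sample itself
            t = n + k / oversample      # fractional position, in samples
            acc = 0.0
            for m in range(n - half_width + 1, n + half_width + 1):
                d = t - m               # never zero, because k >= 1
                sinc = math.sin(math.pi * d) / (math.pi * d)
                window = 0.5 * (1.0 + math.cos(math.pi * d / half_width))
                acc += samples[m] * sinc * window
            peak = max(peak, abs(acc))
    return peak

# A sine at a quarter of the sample rate, phased so every sample lands at
# about 0.707 even though the underlying waveform reaches 1.0.
sig = [math.sin(0.5 * math.pi * n + 0.25 * math.pi) for n in range(64)]
sample_peak = max(abs(x) for x in sig)
estimated_true_peak = true_peak_estimate(sig)
```

Here the sample peak sits about 3 dB below the interpolated peak, which is why a limiter that only inspects sample values can still produce overs after conversion.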

During our work on Ozone we’ve been fortunate to take advice from esteemed mastering engineers and educators like Jonathan Wyner and Bob Katz, as well as many others on our beta team. We are thankful for their contribution to Ozone’s continued success!

Unlocking the impossible: A conversation with Johannes Imort

Senior Research Engineer Johannes Imort shares how iZotope is using machine learning to create tools that can “undo” previous processing, and how this technology is at the core of Ozone 12.

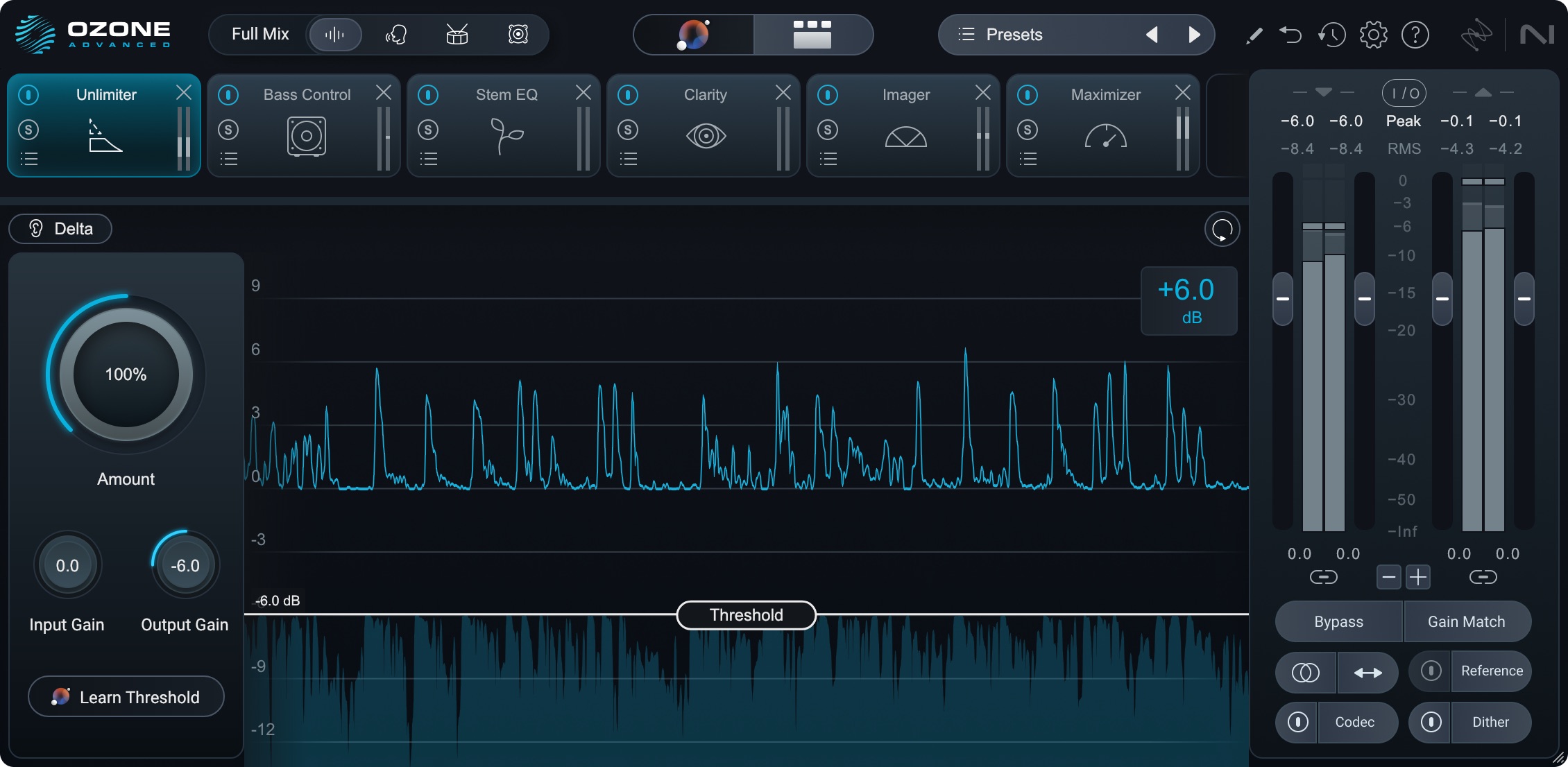

Ozone 12 Unlimiter module

"We didn’t want a black-box AI magic button. Unlimiter lets you set the threshold at which it engages and the overall amount of restoration, so you can decide how assertive it should be. That respects iZotope's philosophy: the artist and engineer stay in charge; the AI is just a helper to get there." - Johannes Imort

The new Unlimiter tool is an industry-first and is described as an "undo button" for overly compressed audio. How does the machine learning technology behind Unlimiter work to transparently restore dynamics and reintroduce lost transients?

Johannes: Undoing audio effects might sound counter-intuitive at first: Why reverse something a mixing or mastering engineer did on purpose? But in practice, you might come across material that’s been pushed hard, or you want to remaster an old recording.

A number of research efforts have looked at inverting audio effects, and the new Unlimiter module draws inspiration in particular from the paper “Music De-Limiter Networks via Sample-Wise Gain Inversion” by Chang-Bin Jeon and Kyogu Lee. This approach reframes limiting as a sample-by-sample gain curve that can be inverted. In other words, if a limiter reduces the signal’s gain at the moments it gets too loud, a neural network can learn to predict the opposite gain and restore the waveform. Their work demonstrated that inverting the limiter’s hidden gain can restore dynamics surprisingly well.
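The gain-inversion framing can be shown with a toy example. This is an editorial sketch, not the paper's or iZotope's model: the limiter is a plain hard limiter, and the "predicted" gains are simply the true gains handed back to the restorer, standing in for what a trained network would have to estimate from the limited audio alone.

```python
def limit(samples, ceiling=1.0):
    """Toy hard limiter: returns the limited signal and the gain it applied."""
    gains = [min(1.0, ceiling / abs(x)) if x != 0.0 else 1.0 for x in samples]
    limited = [x * g for x, g in zip(samples, gains)]
    return limited, gains

def delimit(limited, predicted_gains):
    """Undo limiting by dividing out the (predicted) per-sample gain."""
    return [y / g for y, g in zip(limited, predicted_gains)]

original = [0.2, 1.6, -2.0, 0.4]
limited, true_gains = limit(original)

# Assumption: a perfect predictor. A real model only approximates these
# gains, so real restoration is an estimate rather than an exact inverse.
restored = delimit(limited, true_gains)
```

With perfect gains the restoration is exact up to rounding. The hard part, and the job of the neural network, is recovering those gains without ever seeing the original.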

For Ozone 12 we had to make all of this work in real time, inside a DAW, on the CPU, with low latency. That matters because mastering engineers need a plugin that feels instant: you hit play, and each incoming buffer (typically a few milliseconds of audio) must be processed before the next one arrives. In practical terms, that means streaming inference: a stateful neural network processes short chunks, carries its internal state forward between buffers, and finishes its computation within the user’s audio buffer deadline so the music never hiccups.
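The streaming pattern described here, processing short chunks while carrying state forward, can be illustrated without any neural network. In this editorial sketch a one-pole smoother stands in for the stateful model; the class and function names are hypothetical.

```python
class StreamingSmoother:
    """Stand-in for a stateful model: keeps its state between buffers."""

    def __init__(self, coeff=0.9):
        self.coeff = coeff
        self.state = 0.0  # carried across calls to process()

    def process(self, buffer):
        out = []
        for x in buffer:
            self.state = self.coeff * self.state + (1.0 - self.coeff) * x
            out.append(self.state)
        return out

def run_in_buffers(processor, samples, buffer_size):
    """Feed the signal to the processor one host-sized buffer at a time."""
    out = []
    for start in range(0, len(samples), buffer_size):
        out.extend(processor.process(samples[start:start + buffer_size]))
    return out

signal = [((n * 37) % 101) / 50.0 - 1.0 for n in range(1000)]
offline = StreamingSmoother().process(signal)              # whole file at once
streamed = run_in_buffers(StreamingSmoother(), signal, 64)  # 64-sample buffers
```

Because the state survives between calls, the buffered result matches the offline result sample for sample, regardless of the buffer size the DAW chooses. Resetting the state at each buffer boundary would instead produce a discontinuity every few milliseconds.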

We do use a quite different model architecture than the paper, but we follow the same sample-wise gain inversion idea. During training the network hears lots of short examples where we know both versions: the limited input and the reference without limiting. Over time it learns two things: (1) where limiting actually happened (those flattened peaks and clipped transients), and (2) how much expansion is plausible to rebuild the audio. If you prefer an intuitive picture: the model learns a super-fast, musical “fader ride” that lifts only the places the limiter pushed down, while leaving the rest completely untouched. Keeping the unlimited parts of the audio strictly unprocessed is another example where we extended the approach from the paper, so that the Unlimiter only touches what needs to be touched.

Because it’s been exposed to a wide variety of material, it generalizes well across genres. There are still edge cases (e.g., we did not focus on severely brick-walled or distorted sources) but the trajectory is promising. I can imagine future versions tackling even more extreme limiting, compression, and distortion (and that might be a great fit for RX restoration workflows).

One more design goal was control. We didn’t want a black-box AI magic button. Unlimiter lets you set the threshold at which it engages and the overall amount of restoration, so you can decide how assertive it should be. That respects iZotope's philosophy: the artist and engineer stay in charge; the AI is just a helper to get there.
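One way such controls could sit on top of a predicted restoration gain is sketched below. This is purely hypothetical: the source does not say how Unlimiter's threshold and amount are implemented, so the level-based threshold test and the power-law scaling of the gain are editorial assumptions.

```python
def apply_restoration(samples, predicted_gains, threshold=0.8, amount=1.0):
    """Apply a predicted restoration gain under two user controls.

    Hypothetical mapping: samples below `threshold` pass through untouched;
    above it, the gain is scaled by `amount` in the log domain
    (0.0 = no restoration, 1.0 = the full predicted gain).
    """
    out = []
    for x, g in zip(samples, predicted_gains):
        if abs(x) < threshold:
            out.append(x)                # strictly unprocessed
        else:
            out.append(x * g ** amount)  # partial or full restoration
    return out

samples = [0.1, 0.9, -1.0]
predicted = [1.5, 1.5, 2.0]  # made-up gains a model might output

untouched = apply_restoration(samples, predicted, amount=0.0)
full = apply_restoration(samples, predicted, amount=1.0)
```

With `amount=0.0` the output is identical to the input, and the first sample is never modified at any setting because it sits below the threshold.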

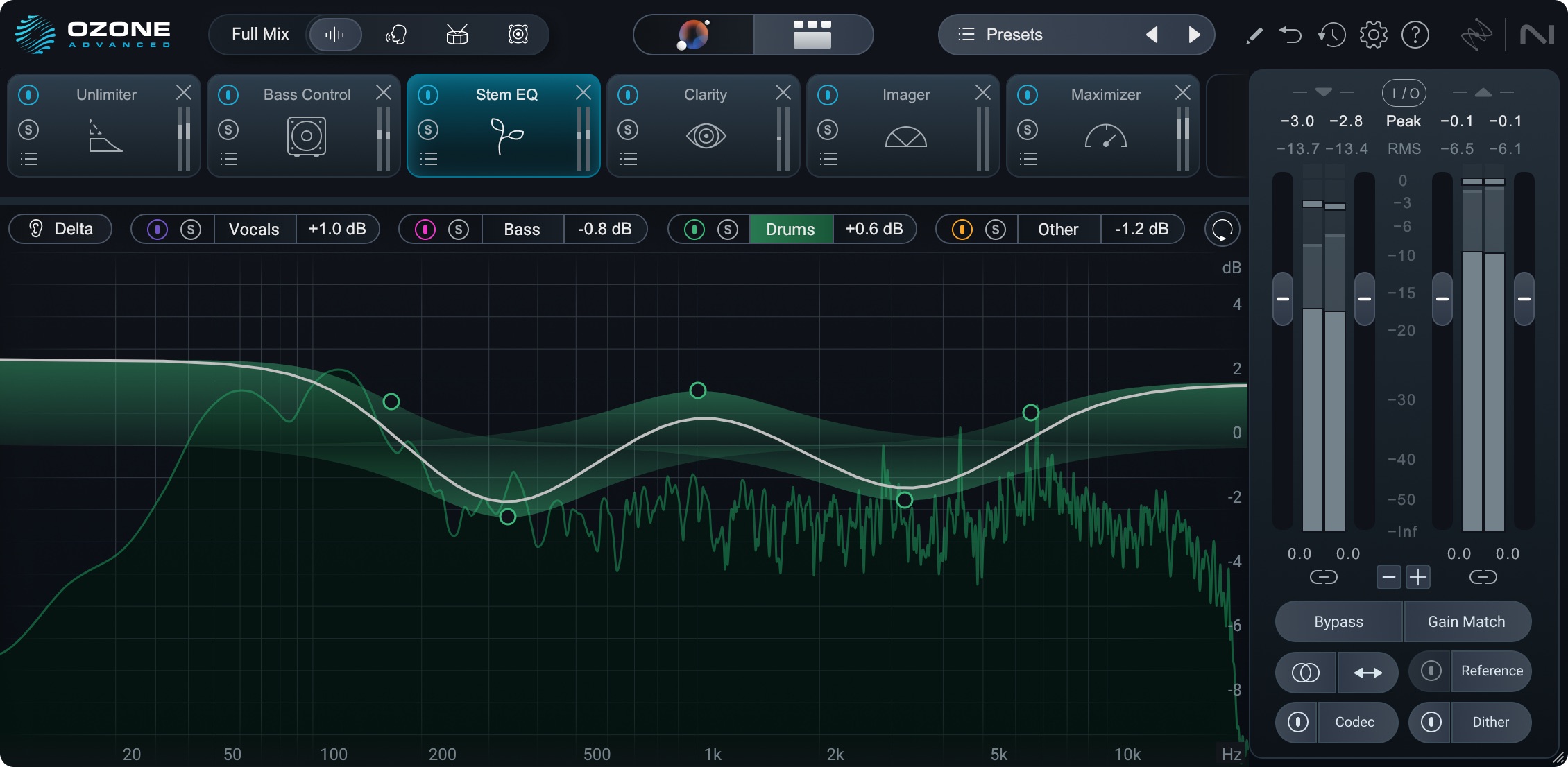

Ozone 12's Stem EQ tool allows users to separately EQ vocals, drums, bass, and other instruments from a stereo file. Could you elaborate on the new neural nets that power the improved Stem Focus modes and how they offer more control with fewer artifacts?

Johannes: Stem EQ builds on real-time, low-latency, phase-aware music source separation. Earlier Ozone features like Stem Focus and Master Rebalance were among the first commercially available tools to do this live in a DAW. Historically, we ran a compact model per source (vocals, bass, drums), and you couldn’t separate all four stems simultaneously. Our new system processes multiple stems jointly with a much more modern architecture and heavy optimization, so quality goes up. The new model delivers much clearer isolation, better transient handling, and fewer artifacts, while the latency stays DAW-friendly.

Ozone 12 Stem EQ module

On top of that, we integrated an EQ for each stem, giving surgical control over the individual musical elements within a master. I think Stem EQ is a nice example of modern machine learning playing well with classic DSP. The separator gives you handles on musical stems; a traditional EQ then does exactly what it’s great at: precise, predictable tonal shaping!

If you zoom out, the research in music source separation moved fast: open-source work like Open-Unmix (Stöter, Uhlich, Liutkus, Mitsufuji) and Deezer’s Spleeter pushed spectrogram-masking approaches around 2019 into the mainstream, and then waveform and hybrid models like Demucs (Alexandre Défossez and colleagues) raised the ceiling again. That community effort, plus competitions like the Sound Demixing Challenge, really advanced the field, and many of those teams shared code and models, which helped everyone.

Real-time operation, however, is still an underrepresented topic: the model must run efficiently, in short buffers, with causal or lightly look-ahead architectures, which are very similar constraints to Unlimiter's. Moreover, for our purposes, the model needs to be mixture-consistent: the separated stems must sum back to the original input mixture, ensuring that nothing from the input mix is lost.
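Mixture consistency can be enforced in several ways. One simple, well-known option is to spread whatever the stem estimates fail to account for equally across the stems. The sketch below illustrates that option only; the source does not say how iZotope's model achieves consistency, and the stem values and per-stem gains are made up.

```python
def make_mixture_consistent(mixture, stem_estimates):
    """Adjust stem estimates so they sum exactly back to the mixture.

    The residual (mixture minus the sum of the estimates) is shared equally
    among the stems. This is one common projection, used here as an example.
    """
    n_stems = len(stem_estimates)
    corrected = [list(stem) for stem in stem_estimates]
    for i, mix_sample in enumerate(mixture):
        residual = mix_sample - sum(stem[i] for stem in stem_estimates)
        for stem in corrected:
            stem[i] += residual / n_stems
    return corrected

def remix(stems, gains):
    """Sum the stems after applying one gain per stem (a stand-in for an EQ)."""
    return [sum(g * stem[i] for stem, g in zip(stems, gains))
            for i in range(len(stems[0]))]

mixture = [1.0, -0.5, 0.25]
estimates = [            # hypothetical vocals, drums, bass, other
    [0.5, -0.2, 0.1],
    [0.3, -0.1, 0.1],
    [0.1, -0.1, 0.0],
    [0.0, 0.0, 0.0],
]
stems = make_mixture_consistent(mixture, estimates)
flat = remix(stems, [1.0, 1.0, 1.0, 1.0])     # reproduces the mixture
boosted = remix(stems, [2.0, 1.0, 1.0, 1.0])  # first stem is doubled
```

The practical payoff is that with every per-stem control left flat, the module returns the original master unchanged, so any audible difference comes only from the adjustments the engineer makes.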

This new technology for stem separation and Unlimiter seems to be at the core of Ozone 12's theme of "doing the impossible" and allowing creators to do things that were not possible before. What was the development process like for these innovative tools, and what do you hope users will be able to accomplish with them?

Johannes: Being both a researcher and a musician means I’m constantly diving into machine learning and signal-processing papers, while at the same time looking for ways to translate those ideas into tools that matter in practice. We had some inspiring ideation sessions with the Ozone team (shoutout to Bill Podolak) where we mapped recent research to concrete user problems Bill had identified previously. From there we arrived at a shortlist of features that could genuinely improve workflows.

With Unlimiter, the goal was to give mastering engineers a practical tool for those cases where material arrives already heavily processed. A controllable dynamics-restoration module can bring back flexibility and punch when it’s needed. Stem EQ felt like the natural next step after Master Rebalance: with the newest architectures and a lot of proprietary engineering, full real-time multi-stem control became a reality. Before deep development, of course, we asked users and beta testers to rank those ideas among many others. Unlimiter and Stem EQ clearly resonated: they were the top ML-powered features, so we went all-in.

Turning those ideas into reality is a long, iterative research process. It means reading literature, identifying promising research directions, and then running many experiments: optimizing and comparing architectures for real-time inference, designing new ones when existing approaches didn’t fit, training models over and over, measuring trade-offs, and shaving down latency bit by bit. A huge part of the work is making models faster and leaner without sacrificing quality, so they behave reliably inside a DAW. That cycle of innovation and optimization is what eventually made both Unlimiter and Stem EQ possible.

One of my early explorations, building a second pair of artificial ears that are precise enough so that they can tell you when a master is done, is still on my wish list. Maybe another time! I’m really happy with how the modules came together, not just technically under the constraints mentioned above, but also thanks to the great collaboration on design and integration with the Ozone team, and I hope people will find them genuinely useful in their work.

The future of mastering is in your hands

The conversation with Alexey Lukin and Johannes Imort highlights how Ozone 12 pushes the boundaries of mastering through a combination of cutting-edge research and user-focused design.

We learned that the new IRC 5 Maximizer is the first in the Ozone series to use a multiband design, which allows it to apply different gain envelopes to four frequency bands for a louder, cleaner master. The Unlimiter and Stem EQ tools, powered by advanced machine learning, are designed to solve previously unfixable problems in audio.

Unlimiter acts as an undo button for over-compressed audio, while Stem EQ provides unprecedented control by allowing users to EQ specific stems like vocals or drums within a single stereo file.

This all aligns with iZotope’s core philosophy: empowering creators by providing intelligent tools that guide, but never take away creative control. With Ozone 12, producers and engineers have powerful new ways to realize their sound and bring tracks to life.